Think back to the first time you sat in a plane as it left the ground, or rode a fast-moving elevator in a skyscraper. Most of us have a great deal of faith in the technology behind these common modes of transportation, but there was a time when neither inspired that confidence. The same is true today of autonomous vehicle technology, which is still relatively nascent. Will a driver ever be able to rely fully on the vehicle’s systems to prevent all collisions? The answer is most likely yes, but it’s worth looking at the technologies that will operate “below the surface” to deliver that experience.

One of the main limitations of current self-driving technologies is that they rely too heavily on a single mode of perception: the sense of “sight.” Current systems emulate sight by combining multiple line-of-sight sensors, such as cameras, LiDAR (used by Google) and RADAR, with advanced computer vision processing and deep learning algorithms. But these are all line-of-sight sensors, which causes both conceptual and performance problems: they all attack the problem the same way, so they share the same blind spots, and, like any hardware, sensors age and malfunction.

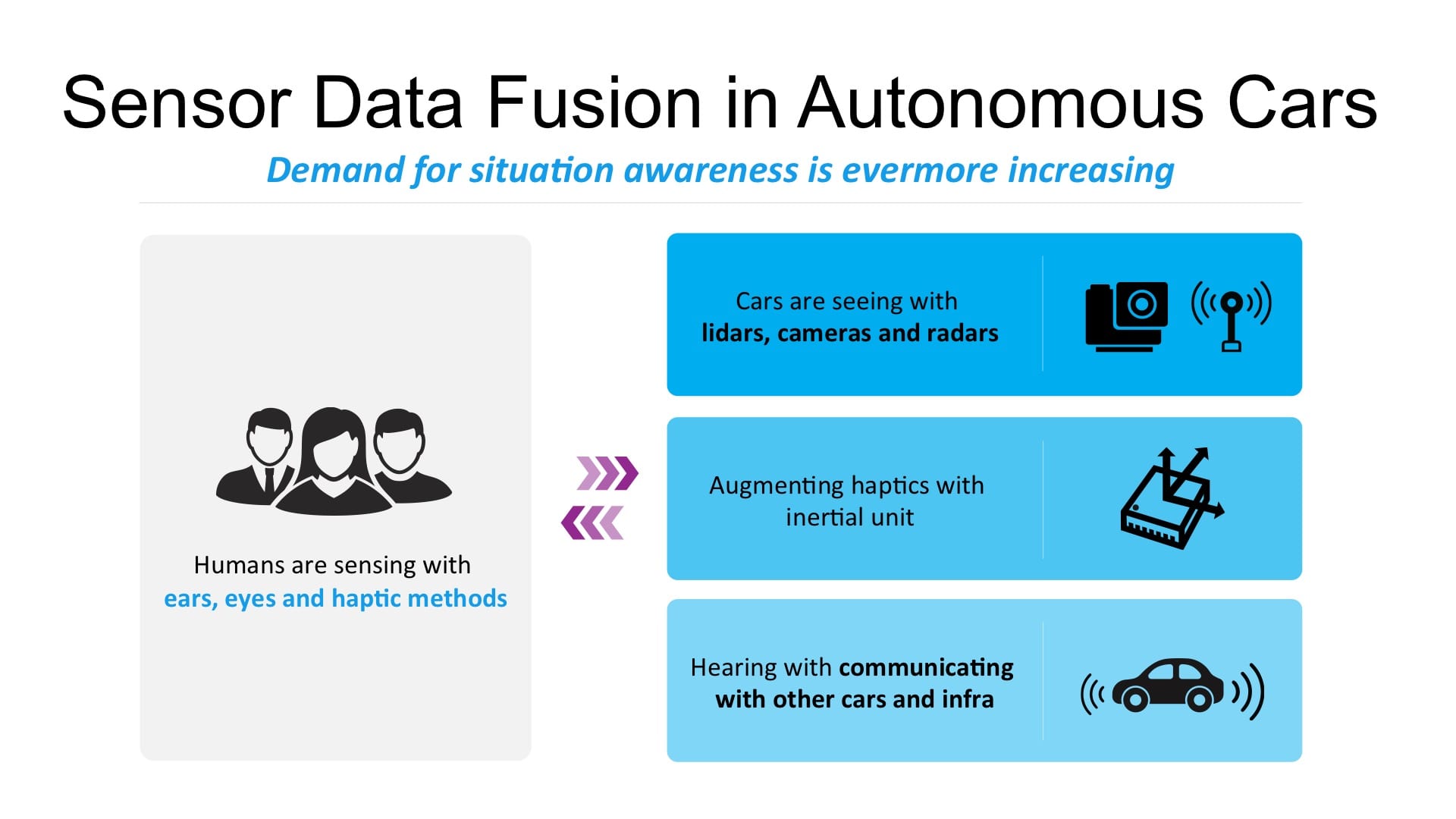

A better approach is to use technology to mimic the human ability, imperfect as it is, to combine multiple senses to perceive the environment and safely drive from place to place. Our sense of sight lets us process enormous amounts of visual information every second (though sometimes through a less-than-perfect windshield). We also sense imperfections and disrepair in the road through vibration. We feel loss of traction on snowy and icy roads. And we use our hearing to perceive a distant ambulance siren or screeching brakes just outside our peripheral vision.

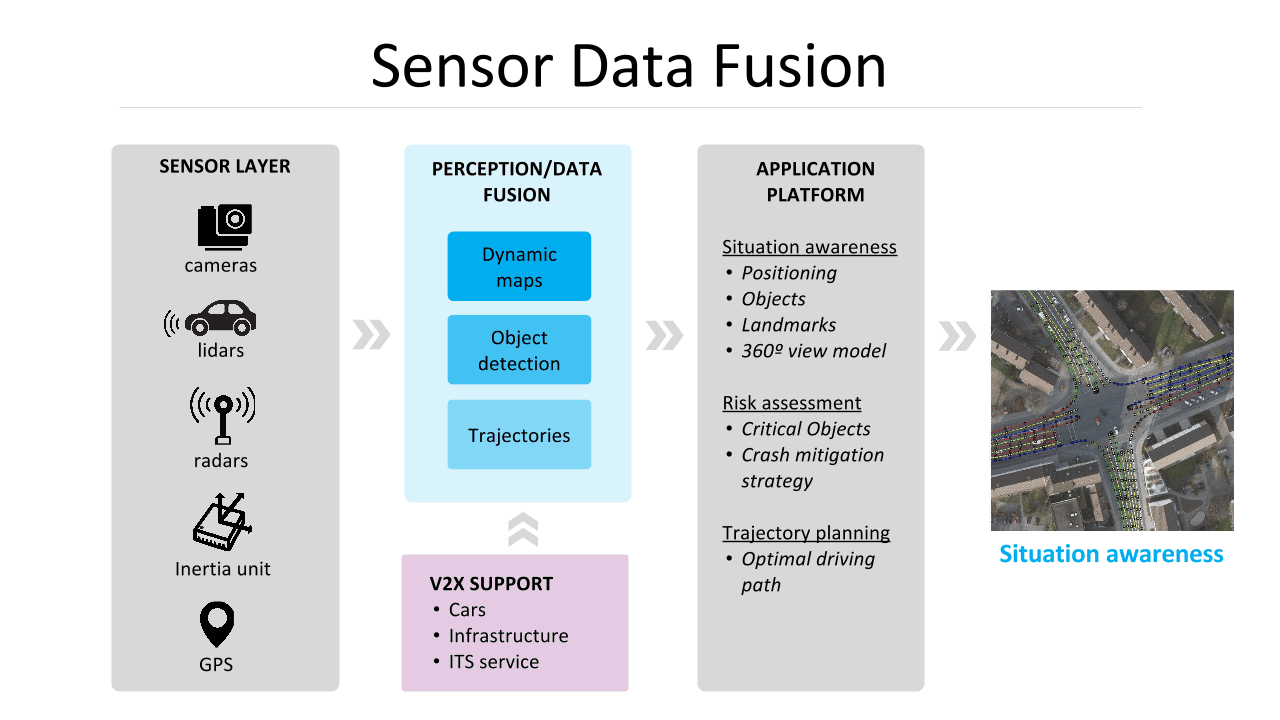

The technological counterpart to this multi-sense approach is currently being investigated under the name collaborative sensor fusion. Collaborative sensor fusion in autonomous vehicles targets the three senses that underpin human situational awareness: sight, hearing and touch (haptics). It combines information from multiple vehicles into an additional layer of intelligence that improves each vehicle’s real-time situational awareness. Raw data from the line-of-sight sensors is combined with crowdsourced information from other vehicles (e.g., 3D precision maps and route information) and with V2X communications, such as real-time messages from other cars (V2V), roadside infrastructure (V2I) and pedestrians (V2P). This collaboration has the potential to prevent accidents and to make more consumers comfortable buying and using self-driving vehicles in the years to come.
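To make “combining information” a little more concrete, here is a minimal sketch of one classic way to fuse independent estimates of the same quantity: inverse-variance weighting, in which more precise sources count for more. The `Estimate` and `fuse` names, the source labels and the variances are hypothetical; production systems typically use Kalman filters or more elaborate track-to-track fusion, which this sketch does not attempt.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Estimate:
    """One source's estimate of an object's position (hypothetical structure)."""
    source: str         # e.g. "lidar", "radar", "v2v" (illustrative labels)
    position_m: float   # 1-D position along the lane, in metres
    variance_m2: float  # measurement variance, in square metres (must be > 0)


def fuse(estimates: List[Estimate]) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of independent estimates.

    Returns (fused_position_m, fused_variance_m2). Assumes the sources'
    errors are independent and unbiased.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance_m2 for e in estimates]
    total = sum(weights)
    position = sum(w * e.position_m for w, e in zip(weights, estimates)) / total
    return position, 1.0 / total


# The car's own LiDAR sees an object at 50.0 m; a nearby car reports the same
# object over V2V at 51.0 m with a less precise estimate.
pos, var = fuse([Estimate("lidar", 50.0, 0.25), Estimate("v2v", 51.0, 1.0)])
print(f"fused position {pos:.2f} m, variance {var:.2f} m^2")
```

The fused variance (0.20 m²) is smaller than either input’s, which is a formal version of the point above: an extra, independent source adds information even when it is less precise than the vehicle’s own sensors.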

Let’s explore why.

As human drivers know only too well, road conditions can change suddenly and become hazardous, and objects or animals can appear out of nowhere. With collaborative sensor fusion, data generated by sensors on board one vehicle can be used to warn other vehicles on the road about these dangers. This crowdsourced information can be processed locally and in the cloud to build highly accurate, real-time precision maps, as HERE is doing, which add to each vehicle’s intelligence and real-time situational awareness and make autonomous driving safer.
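One way crowdsourced hazard reports could be aggregated is sketched below: reports are bucketed into coarse map cells, and a hazard counts as confirmed only when enough distinct vehicles have reported it recently. The `HazardMap` class, the cell size, the reporter threshold and the time window are all hypothetical parameters chosen for illustration; they do not describe how HERE or any other map provider actually works.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Cell = Tuple[int, int]


class HazardMap:
    """Toy crowdsourced hazard layer (hypothetical design, not a real map API)."""

    def __init__(self, min_reporters: int = 3, window_s: float = 300.0,
                 cell_deg: float = 0.001):
        self.min_reporters = min_reporters  # distinct vehicles needed to confirm
        self.window_s = window_s            # reports older than this are ignored
        self.cell_deg = cell_deg            # grid cell size in degrees (~100 m of latitude)
        # (cell, hazard type) -> {vehicle id: time of that vehicle's latest report}
        self._reports: Dict[Tuple[Cell, str], Dict[str, float]] = defaultdict(dict)

    def _cell(self, lat: float, lon: float) -> Cell:
        return (math.floor(lat / self.cell_deg), math.floor(lon / self.cell_deg))

    def report(self, vehicle_id: str, hazard: str, lat: float, lon: float,
               t_s: float) -> None:
        """Record one vehicle's sensor-detected hazard; repeats refresh its time."""
        self._reports[(self._cell(lat, lon), hazard)][vehicle_id] = t_s

    def confirmed(self, now_s: float) -> List[Tuple[Cell, str]]:
        """Hazards reported by at least min_reporters vehicles within the window."""
        result = []
        for key, reporters in self._reports.items():
            recent = [v for v, t in reporters.items() if now_s - t <= self.window_s]
            if len(recent) >= self.min_reporters:
                result.append(key)
        return result


hazards = HazardMap()
for i, lat in enumerate((42.30012, 42.30015, 42.30018)):
    hazards.report(f"car-{i}", "ice", lat, -83.04512, t_s=100.0 + i)
print(hazards.confirmed(now_s=110.0))   # confirmed by three vehicles
print(hazards.confirmed(now_s=1000.0))  # all reports have aged out
```

Requiring several independent reporters trades a little delay for robustness: a single faulty sensor cannot put a phantom hazard on the map, which matters given that sensors age and malfunction.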

V2X communications will add another important layer of safeguards. For example, digital signals transmitted by moving vehicles could give autonomous vehicles a new form of “hearing.” Suppose a truck at an intersection is hidden behind an obstruction or is otherwise outside the line of sight of an approaching car’s sensors. Those physical sensors will not detect it, but if the truck transmits a V2V message that the approaching car can receive, the car can still “hear” the truck, much as you can hear the sound of tires on the road without seeing the vehicle.
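The “hearing” idea can be sketched as a merge step: objects reported over V2V are added to the vehicle’s own object list unless an on-board sensor already sees something at roughly the same place. The `TrackedObject` type, the shared local coordinate frame and the 2 m association gate are simplifying assumptions; a real system would also have to handle clock offsets, coordinate transforms and message authentication.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TrackedObject:
    obj_id: str
    x_m: float   # position in a shared local frame, metres (assumed already aligned)
    y_m: float
    source: str  # "onboard" or "v2v"


def merge_v2v(onboard: List[TrackedObject], v2v_reports: List[TrackedObject],
              gate_m: float = 2.0) -> List[TrackedObject]:
    """Add V2V-reported objects that no on-board sensor has detected nearby."""
    merged = list(onboard)
    for rep in v2v_reports:
        seen = any(math.hypot(rep.x_m - o.x_m, rep.y_m - o.y_m) <= gate_m
                   for o in onboard)
        if not seen:
            merged.append(rep)  # e.g. a truck hidden behind an obstruction
    return merged


onboard = [TrackedObject("car-ahead", 10.0, 0.0, "onboard")]
v2v = [
    TrackedObject("car-ahead-self-report", 10.5, 0.3, "v2v"),  # same car, already seen
    TrackedObject("truck", 30.0, 15.0, "v2v"),                 # outside our line of sight
]
for obj in merge_v2v(onboard, v2v):
    print(obj.obj_id, obj.source)
```

Here the car ahead is kept once, from the on-board sensors, while the occluded truck, which only V2V knows about, is added to the list.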

Current and developing ITS standards, including SAE DSRC, ETSI ITS and cellular V2X based on LTE and upcoming 5G, define frameworks, message definitions and performance requirements to support V2X communications. In the DSRC context, for example, the initial message format supports the exchange of status information: each vehicle periodically broadcasts a short-range message called the Basic Safety Message (BSM), telling other vehicles in the area where it is, what it has done and what it is about to do. To support collaborative sensor fusion, more advanced standards will need to support the exchange of on-board sensor information, such as camera and RADAR detections, so that each vehicle can build an aggregated map of its environment from its own sensors and those of surrounding vehicles, and detect hidden objects.
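A heavily simplified, BSM-like message is sketched below to show the kind of status information involved and how a receiver might use it. The field names, the local x/y frame and the JSON encoding are assumptions chosen for readability; the real BSM is defined in SAE J2735 with its own data elements and a compact ASN.1 binary encoding. The dead-reckoning method is likewise an illustrative addition, not part of the standard.

```python
import json
import math
from dataclasses import asdict, dataclass
from typing import Tuple

BROADCAST_INTERVAL_S = 0.1  # BSMs are commonly sent about 10 times per second


@dataclass
class SafetyMessage:
    """Simplified, hypothetical stand-in for a Basic Safety Message."""
    vehicle_id: str
    timestamp_s: float
    x_m: float          # east position in a shared local frame, metres
    y_m: float          # north position, metres
    speed_mps: float
    heading_deg: float  # clockwise from north
    brake_active: bool

    def encode(self) -> bytes:
        return json.dumps(asdict(self)).encode("utf-8")

    @staticmethod
    def decode(data: bytes) -> "SafetyMessage":
        return SafetyMessage(**json.loads(data.decode("utf-8")))

    def predict_position(self, t_s: float) -> Tuple[float, float]:
        """Dead-reckon the sender's position at t_s, assuming constant speed and heading."""
        dt = t_s - self.timestamp_s
        heading = math.radians(self.heading_deg)
        return (self.x_m + self.speed_mps * dt * math.sin(heading),
                self.y_m + self.speed_mps * dt * math.cos(heading))


# A truck heading due east at 10 m/s broadcasts its status.
sent = SafetyMessage("truck-42", 0.0, 0.0, 0.0, 10.0, 90.0, False)
received = SafetyMessage.decode(sent.encode())
x, y = received.predict_position(received.timestamp_s + BROADCAST_INTERVAL_S)
print(f"expected position at next broadcast: ({x:.1f}, {y:.1f}) m")
```

Dead reckoning between messages is one reason a periodic broadcast is useful: a receiver that misses a message or two can still keep a reasonable estimate of where the sender is, though that estimate degrades the longer it goes without an update.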

Another benefit of V2X communications is that older vehicles can be retrofitted with aftermarket equipment (using DSRC and/or LTE-V2X) that lets human-driven vehicles communicate with autonomous vehicles, so that driverless vehicles can “hear” where those vehicles are and take appropriate action to navigate safely.

By combining line-of-sight sensors, crowdsourced information and V2X communications, collaborative sensor fusion gives autonomous vehicles an additional layer of intelligence, and the resulting real-time situational awareness matters most when the vehicle faces challenges such as sensor malfunction, sensor performance degradation or low visibility. However, if fully reliable autonomous vehicles are ever to become ubiquitous, sensor data fusion over DSRC and current 4G LTE may not be enough. We will have to look to 5G and to more granular sensor collaboration systems to meet ultra-low latency requirements and keep up with dynamic road conditions in real time.
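A quick calculation shows why latency matters: while a message is in transit, the vehicles involved keep moving. The sketch below computes that distance for a car at 100 km/h; the latency values are illustrative round numbers, not measured figures for DSRC, 4G LTE or 5G.

```python
def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance (m) a vehicle travels while a message is delayed by latency_ms."""
    speed_mps = speed_kmh * 1000.0 / 3600.0  # km/h -> m/s
    return speed_mps * latency_ms / 1000.0   # ms -> s


for latency_ms in (100, 50, 10, 1):
    d = distance_during_latency_m(100.0, latency_ms)
    print(f"{latency_ms:>4} ms of latency at 100 km/h -> {d:.2f} m travelled")
```

At 100 km/h (about 27.8 m/s), 100 ms of delay corresponds to roughly 2.8 m of travel, while 10 ms corresponds to under 0.3 m; for two vehicles closing head-on at that speed, the distances double.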

Autonomous vehicle technology is progressing very rapidly. Currently, Tesla advises drivers to keep their hands on the steering wheel at all times, and is careful to use language like “driver-assist technology” rather than “self-driving” or “autonomous.” But only five years down the road, in 2021, Ford Motor Company expects to have fully autonomous vehicles with “no steering wheel, no gas pedals, no brake pedals… a driver will not be required.” That reality will be a triumph of sensor fusion working with seamless, low-latency 5G networks: a “human” approach to the problem of getting humans where they’re going.